Make assumptions explicit, and then refine them

AI models don’t “think” in the human sense. But they do embed assumptions—lots and lots of them. Hidden priors, structural defaults, causal heuristics, statistical norms. Often, when you ask the AI a question and it responds with confidence, what it’s really doing is applying a stack of unspoken assumptions to your input.

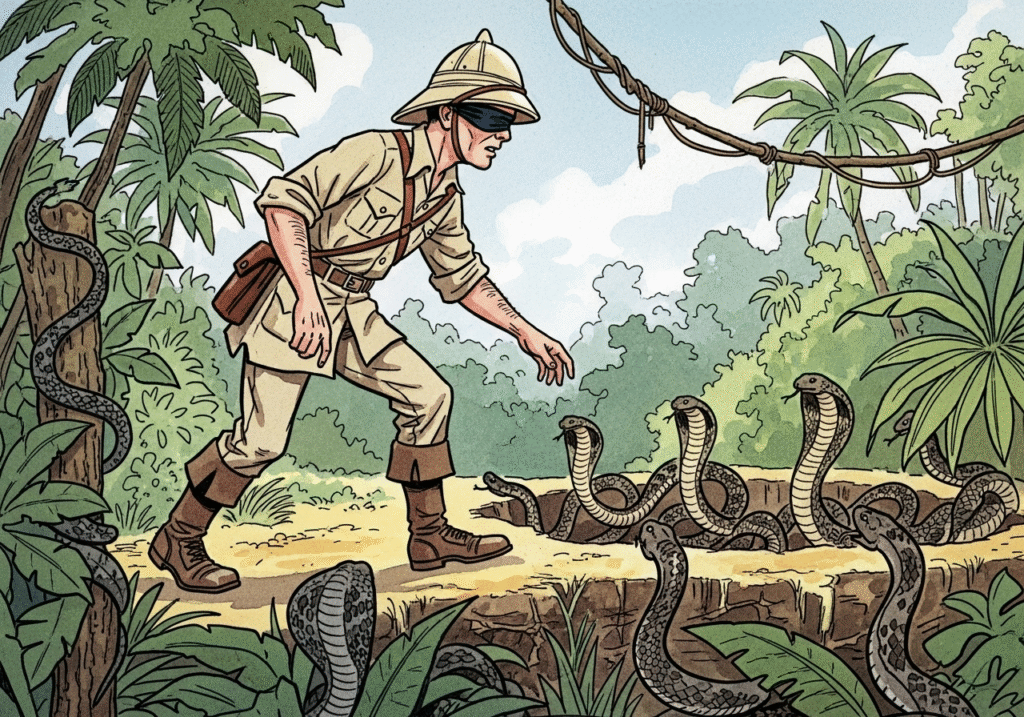

If you don’t know what those assumptions are, you’re flying blind. That’s where this skill comes in: you force the disclosure of underlying assumptions. Instead of taking the AI’s output at face value, you train yourself to interrogate the scaffolding underneath the answer. Ask: “Why this? Under what assumptions? According to what logic?” Until those assumptions are on the table, you cannot judge how precise or rigorous the answer really is.

Let’s walk through an example. You ask: “What would be the likely economic impact of implementing a universal basic income in the U.S.?” You’ll get a long, confident, tidy-sounding answer. Probably a mix of cost estimates, productivity effects, incentives to work, and maybe some international analogies.

But behind that synthesis is a tangle of unspoken choices: How does the AI treat labor market elasticity? What does it assume about government efficiency? Which UBI experiments (e.g. Finland, Stockton, Kenya) does it privilege, and which does it ignore? Does it assume full replacement of other welfare programs, or a supplement? These are not small differences. Each one materially changes the nature of the analysis, the framework used to process the evidence, and, ultimately, the conclusion.

So, the move is simple: don’t stop at the answer. Your follow-ups might look like:

- “List the key assumptions required to support your analysis above.”

- “What economic models or historical analogies are implicitly being relied on?”

- “Which of your assumptions, if changed, would most affect your conclusion?”
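
If you find yourself asking these follow-ups often, it can help to script them so none gets skipped. The sketch below is one possible way to do that in Python. It assumes a chat-style message list with `role` and `content` keys (a common convention, not any specific vendor's API), and the helper name `build_assumption_probes` is hypothetical.

```python
from typing import Dict, List

# The three follow-up questions from the list above, verbatim.
ASSUMPTION_PROBES: List[str] = [
    "List the key assumptions required to support your analysis above.",
    "What economic models or historical analogies are implicitly being relied on?",
    "Which of your assumptions, if changed, would most affect your conclusion?",
]


def build_assumption_probes(question: str, answer: str) -> List[List[Dict[str, str]]]:
    """Return one conversation per probe, each ending with a follow-up.

    Hypothetical helper: the role/content message shape is an assumption
    about how your chat client expects its input.
    """
    history = [
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]
    # Concatenation builds a new list, so each probe gets its own conversation.
    return [history + [{"role": "user", "content": probe}] for probe in ASSUMPTION_PROBES]


conversations = build_assumption_probes(
    "What would be the likely economic impact of implementing a universal basic income in the U.S.?",
    "<the model's first answer goes here>",
)
for convo in conversations:
    print(convo[-1]["content"])
```

Each conversation can then be sent to whatever model you are using. Keeping the probes as separate conversations is a design choice, not a requirement; asking them in sequence within one thread works too.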

The AI will often respond with a surprisingly clean unpacking: “This conclusion assumes that the UBI would be funded through progressive taxation, that it would replace rather than supplement existing welfare programs, and that recipients would not significantly reduce their labor supply…”

Now you’re in business. You can pick those assumptions apart. You can flip one: “Now rerun the analysis assuming that labor force participation declines by 15% among low-wage earners.” Or challenge its priors: “Why are you treating the Finnish UBI experiment as more representative than the Kenyan pilot?”
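
One way to keep this disciplined is to write down the assumptions the AI disclosed and flip them one at a time. The following is a minimal sketch, assuming you have copied the disclosed assumptions into a dictionary by hand. The keys, the `flip_assumption` name, and the prompt wording are all illustrative; the assumption text comes only from the example above.

```python
from typing import Dict

# Assumptions as disclosed in the example answer above, keyed by short
# labels. The labels are illustrative; the wording follows the example.
disclosed: Dict[str, str] = {
    "funding": "the UBI would be funded through progressive taxation",
    "welfare": "it would replace rather than supplement existing welfare programs",
    "labor_supply": "recipients would not significantly reduce their labor supply",
}


def flip_assumption(assumptions: Dict[str, str], key: str, new_value: str) -> str:
    """Build a rerun prompt that changes one assumption and holds the rest."""
    if key not in assumptions:
        raise KeyError(f"unknown assumption: {key}")
    # Everything except the flipped assumption is restated as fixed.
    held = [text for name, text in assumptions.items() if name != key]
    lines = [f"Now rerun the analysis assuming that {new_value}."]
    lines.append("Keep these assumptions unchanged:")
    lines.extend(f"- {text}" for text in held)
    lines.append("State how the conclusion changes and why.")
    return "\n".join(lines)


prompt = flip_assumption(
    disclosed,
    "labor_supply",
    "labor force participation declines by 15% among low-wage earners",
)
print(prompt)
```

Changing a single assumption per rerun makes it easier to attribute any shift in the conclusion to that assumption rather than to several changes at once.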

What this skill gives you is epistemic leverage. You no longer accept answers passively—you treat every output as a surface layer, behind which lies a tangle of logic, evidence, assumption, and probabilistic belief that hasn’t been articulated. Now you know how to tease that out.

The goal isn’t to catch the AI in a mistake. The goal is to treat the analysis like a living model—one that has priors, theories, and frames, just like that of any economist, scientist, or strategist. And just like those experts, it has blind spots.

There’s an old line in epistemology: “He who asks the questions controls the frame.” With AI, this is literally true. And if you don’t ask the AI to expose its frame, it will choose one for you.

And it may have something entirely different in mind than you do. Clarify your assumptions and you clarify your analysis. This is a truism that applies to all analyses, but it is doubly true of AI-assisted analysis: you cannot be certain that you share a common framework until you surface it and, if necessary, change or refine it.